20 February 2024

Between Innovation and Ethics: AI-Driven Weapons Under Scrutiny

At a time when artificial intelligence (AI) is gaining traction, we are at a critical crossroads where technological advances are challenging our traditional views on warfare.

Recent research by Jonathan Kwik, a lawyer specializing in the law of war, featured in an article in Het Parool, shines a spotlight on this complex issue, focusing on the implications of autonomous weapon systems (AWS) and the need for human control.

With examples such as the large-scale deployment of smart drones by Ukraine and the autonomous detection of missiles by Israel's Iron Dome system, we see a rapidly evolving landscape in which AI plays an increasingly important role on the battlefield. These developments bring new challenges that traditional rules of war and international law may no longer be sufficient to address.

Kwik's research highlights the complexity of AWS and raises important questions about how these systems fit within the existing legal framework of warfare. The autonomy of these systems, their ability to make independent decisions, and the unpredictability of their actions all contribute to the challenge of applying traditional principles of responsibility.

This issue requires in-depth discussion and awareness, not only within military and legal circles, but also among the broader public. As we move into the future of warfare, we must continue the dialogue on leveraging AI's benefits without losing sight of our ethical principles and responsibilities.

The full article in Het Parool provides an in-depth analysis of these issues and is a must-read for anyone interested in the intersection of technology, ethics and warfare. It invites us to think about the future we want to shape and how we can navigate the challenges ahead with wisdom and prudence.

Read more here.

Published by Het Parool.

Similar news items

September 9

Multilingual organizations risk inconsistent AI responses

AI systems do not always give the same answers across languages. Research from CWI and partners shows that Dutch multinationals may unknowingly face risks, from HR to customer service and strategic decision-making.

read more >

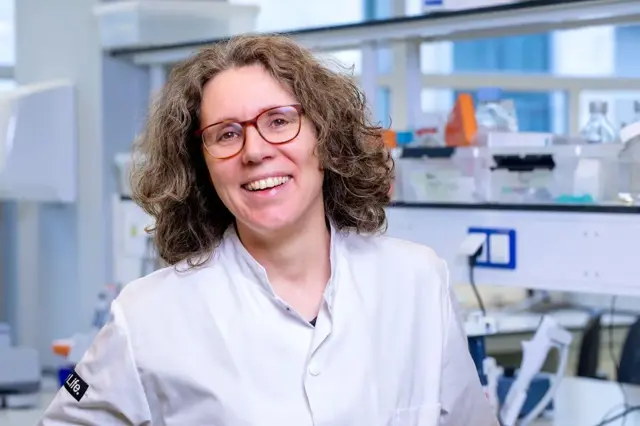

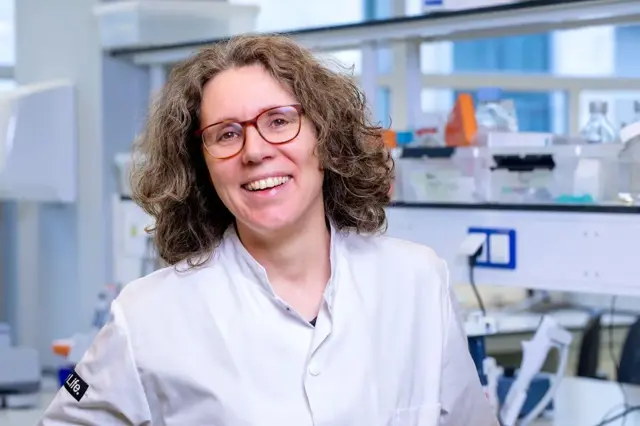

September 9

Making immunotherapy more effective with AI

Researchers at Sanquin have used an AI-based method to decode how immune cells regulate protein production. This breakthrough could strengthen immunotherapy and improve cancer treatments.

September 9

ERC Starting Grant for research on AI’s impact on labor markets and the welfare state

Political scientist Juliana Chueri (Vrije Universiteit Amsterdam) has received an ERC Starting Grant for her research into the political consequences of AI for labor markets and the welfare state.
