19 November 2023

‘Independent scientists must take the lead in monitoring AI’

Experts from the UvA and Amsterdam UMC, two knowledge institutions for which artificial intelligence is an important topic, have today published 'living guidelines' in Nature for the responsible use of generative AI tools such as ChatGPT.

The core principles of the guidelines are accountability, transparency and independent oversight. The authors also call for the immediate establishment of an independent oversight body made up of scientists.

Generative AI is a form of artificial intelligence that can automatically create text, images, audio and other content. Its use has advantages, but also disadvantages. There is therefore an urgent need for scientific and societal oversight in the rapidly developing field of artificial intelligence.

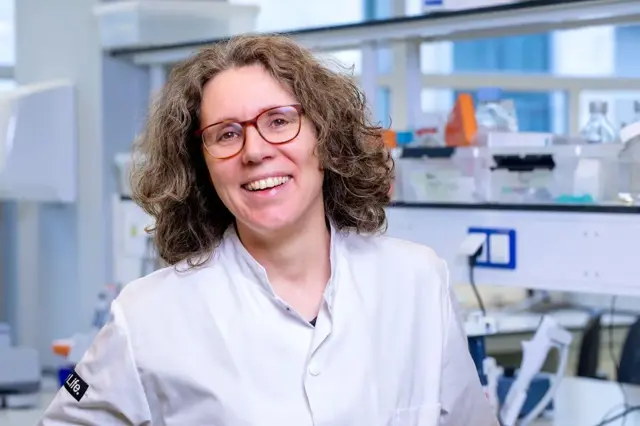

The first author of the Nature article is Claudi Bockting, professor of clinical psychology in psychiatry at Amsterdam UMC and co-director of the Centre for Urban Mental Health. She argues that "AI tools can flood the internet with misinformation and 'deep fakes' that are indistinguishable from the real thing. Over time, this could erode trust between people, in politicians, institutions and science. Therefore, independent scientists should take the lead in testing and improving the safety and security of generative AI. However, most scientists now have limited ability to develop generative AI tools and cannot evaluate them in advance. They lack access to training data, facilities or funding." The other authors of the guidelines are Robert van Rooij and Willem Zuidema, from the Institute for Logic, Language and Computation at the University of Amsterdam; Eva van Dis from Amsterdam UMC; and Johan Bollen from Indiana University.

The guidelines in Nature were drafted after two summits with members of international organisations such as the International Science Council, the University-based Institutes for Advanced Study, the European Academy of Sciences and Arts and members of organisations such as UNESCO and the United Nations. This consortium's initiative offers a balanced perspective amid the slow pace of government regulation, fragmented guideline developments and the uncertainty as to whether large technology companies will engage in self-regulation.

Slow pace

According to the authors, monitoring is the task of a scientific institute. This institute should focus mainly on quantitative measurements of the effects of AI, both positive and potentially detrimental. Evaluations should be conducted according to scientific methods. The public good and the authenticity of scientific research take priority here, not commercial interests.

The 'living guidelines' revolve around three key principles:

- Accountability: The consortium advocates a human-centred approach. Generative AI can help with tasks that carry no risk of major consequences, but essential activities, such as writing scientific publications or peer reviews, should retain human oversight.

- Transparency: It is imperative that the use of generative AI is always clearly stated. This enables the wider scientific community to assess the impact of generative AI on the quality of research and decision-making. In addition, the consortium urges developers of AI tools to be transparent about their methods to enable comprehensive evaluations.

- Independent oversight: Given the huge financial stakes of the generative AI sector, relying on self-regulation alone is not feasible. External, independent, objective monitoring is crucial to ensure ethical and high-quality use of AI tools.

Conditions

The authors stress the urgent need for their proposed scientific body, which can also address emerging or unsolved problems in the field of AI. However, some conditions must be met before this body can do its job properly. It must have sufficient computing power to test full-scale models, and it must be given enough information about source data to assess how AI tools have been trained, even before they are released. It also needs international funding and broad legal support, as well as collaboration with technology industry leaders that does not compromise its independence.

In short, the consortium stresses the need for investment in a committee of experts and an oversight body. In this way, generative AI can progress responsibly, balancing innovation and social welfare.

About the consortium

The consortium consists of AI experts, computer scientists and specialists in the psychological and social effects of AI from Amsterdam UMC, IAS and the Faculty of Science at the UvA.

Publication details

Living guidelines for generative AI — why scientists must oversee its use (nature.com), Claudi L. Bockting, Jelle Zuidema, Robert van Rooij, Johan Bollen, Eva A.M. van Dis, was published in Nature on 19 October 2023.

Photo credits: University of Amsterdam

Similar news items

September 9

Multilingual organizations risk inconsistent AI responses

AI systems do not always give the same answers across languages. Research from CWI and partners shows that Dutch multinationals may unknowingly face risks, from HR to customer service and strategic decision-making.

read more >

September 9

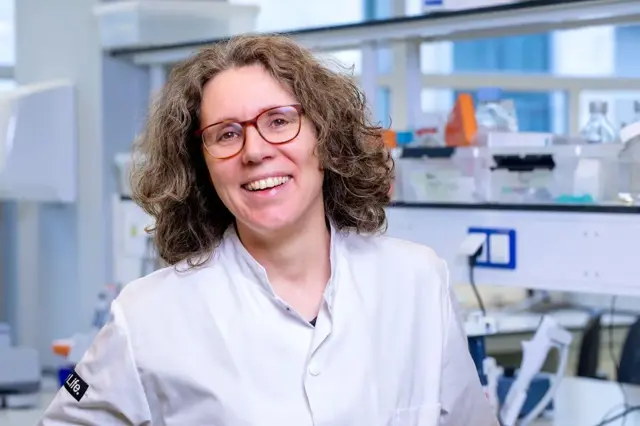

Making immunotherapy more effective with AI

Researchers at Sanquin have used an AI-based method to decode how immune cells regulate protein production. This breakthrough could strengthen immunotherapy and improve cancer treatments.

read more >

September 9

ERC Starting Grant for research on AI’s impact on labor markets and the welfare state

Political scientist Juliana Chueri (Vrije Universiteit Amsterdam) has received an ERC Starting Grant for her research into the political consequences of AI for labor markets and the welfare state.

read more >