6 December 2023

How do you humanise talking machines (but without the bad parts)?

This year, AI systems that can write almost human-like texts have made a breakthrough worldwide. However, many academic questions on how exactly these systems work remain unanswered. Three UvA researchers are trying to make the underlying language models more transparent, reliable and human.

The launch of ChatGPT by OpenAI on 30 November 2022 was a game changer for artificial intelligence. All of a sudden the public became aware of the power of writing machines. Some two months later, ChatGPT already had 100 million users.

Now, students are using it to write essays, programmers are using it to generate code, and companies are automating everyday writing tasks. At the same time, there are significant concerns about the unreliable nature of automatically generated text, and about the adoption of stereotypes and discrimination found in the training data.

The media across the world quickly jumped on ChatGPT, with stories flying around on the good, the bad and everything in between. ‘Before the launch of ChatGPT, I hadn’t heard a peep from the media about this topic for a long time,’ says UvA researcher Jelle Zuidema, ‘while my colleagues and I have tried to tell them multiple times over the years that important developments were on the horizon.’

Zuidema is an associate professor of Natural Language Processing, Explainable AI and Cognitive Modelling at the Institute for Logic, Language and Computation (ILLC). He advocates a measured discussion of the use of large language models, the kind of model that underlies ChatGPT (see Box 1). Zuidema: ‘Downplaying this development or acting outraged about it, saying things like “it’s all just plagiarism”, is pointless. Students use it, scientists use it, programmers use it, and many other groups in society are going to be dealing with it. Instead, we should be asking questions like: What consequences will language models have? What jobs will change? What will happen to the self-worth of copywriters?’

This article was published by the UvA.

The image was generated by the University of Amsterdam using Adobe Firefly (keywords: shallow brain architecture).

Similar news items

September 9

Multilingual organizations risk inconsistent AI responses

AI systems do not always give the same answers across languages. Research from CWI and partners shows that Dutch multinationals may unknowingly face risks, from HR to customer service and strategic decision-making.

September 9

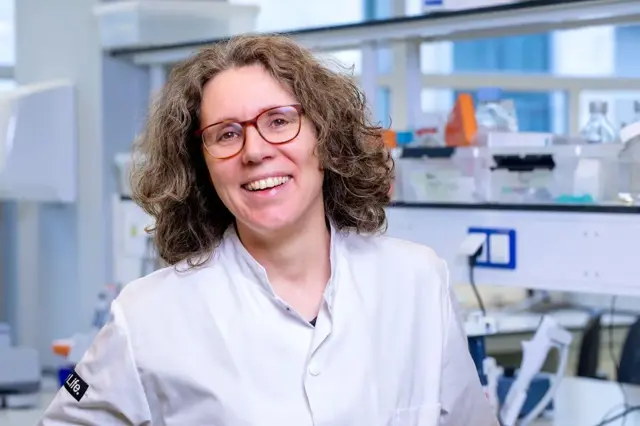

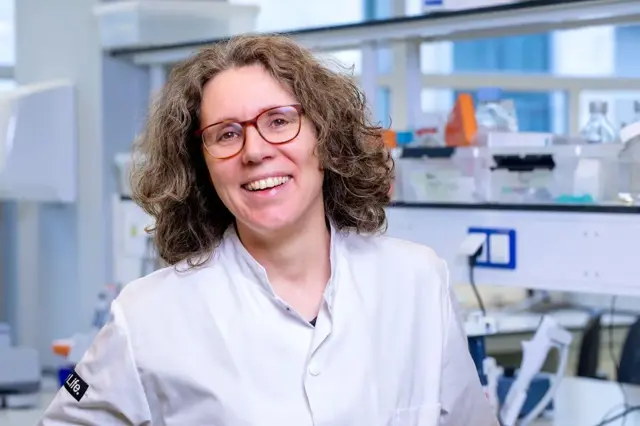

Making immunotherapy more effective with AI

Researchers at Sanquin have used an AI-based method to decode how immune cells regulate protein production. This breakthrough could strengthen immunotherapy and improve cancer treatments.

September 9

ERC Starting Grant for research on AI’s impact on labor markets and the welfare state

Political scientist Juliana Chueri (Vrije Universiteit Amsterdam) has received an ERC Starting Grant for her research into the political consequences of AI for labor markets and the welfare state.
