
5 October 2023

The vital importance of human-centred AI, a renewed call

Artificial Intelligence (AI) is undoubtedly the most influential systems technology today. AI is having a major impact on the way people interact with digital systems and services.

How should our society relate to this digital transformation? How do we strike a balance between freedom of action and the functional added value of AI? How do we safeguard public values, our fundamental rights and democratic freedoms? And how do we ensure that everyone can benefit from the positive effects of AI without experiencing its negative effects?

Impact of AI on people and society

AI offers many opportunities and can make a positive contribution to society and the economy. Think, for instance, of the accelerated development of medicines for rare diseases, of facilitating sustainability, and of supporting professionals so that they have more time for hands-on work at the bedside and in the classroom. However, AI also has a downside.

It is extremely important that AI applications are developed without bias, to avoid large-scale exclusion or negative assessment of people. In addition, can people still oversee how AI systems work, and take responsibility for the decisions and actions they take based on AI? The level of citizen involvement in the implementation of AI within our society will determine how successfully we can live together with this technology.

Responsible deployment of AI

In the manifesto Human-centred AI, a renewed call for meaningful and responsible applications, the Dutch AI Coalition argues for full commitment to the development of human-centred AI with a learning approach.

"It is important to ensure that people working with AI technology have a good understanding of the points of interest and limitations. This means not only having good ethical and legal frameworks, but also supporting them with instructions, courses and training," said Irvette Tempelman, chair of the Human-centred AI working group. "As Europe, we are lagging behind the US and China in terms of AI development. That is why it is also so important to realise that we only have a limited option to go full throttle as is sometimes suggested. However, we can fully commit to responsible, human-centred AI."

Development and application of AI

How can we design and use AI systems to reap their benefits responsibly? How can we determine whether these systems use data responsibly? And how can we properly embed and use them in society? The importance of these questions is widely recognised. The huge positive and negative impact of AI on people and society brings with it a special responsibility, both for the designers and developers of AI systems and for those who apply and use them.

ELSA concept

The ELSA concept provides a good basis for the development and application of human-centred AI solutions. ELSA stands for Ethical, Legal and Societal Aspects. The approach uses clear ethical and legal frameworks and helpful regulations to develop human-centred AI in a European context, while actively involving stakeholders. The result is manageable socio-economic impacts of AI and trust in how AI works.

This pragmatic approach provides a solid basis not only to talk ethically about AI, but also to shape AI ethically. This is not just about the algorithms, but also about how, where and by whom they are applied.

Interested?

The Dutch AI Coalition is developing several initiatives for the responsible and meaningful development of human-centred AI. Interested in learning more about the coalition's vision or the ELSA concept? Then visit the Working Group on Human-centred AI page or contact Náhani Oosterwijk for more information.


Similar news items

September 9

Multilingual organizations risk inconsistent AI responses

AI systems do not always give the same answers across languages. Research from CWI and partners shows that Dutch multinationals may unknowingly face risks, from HR to customer service and strategic decision-making.


September 9

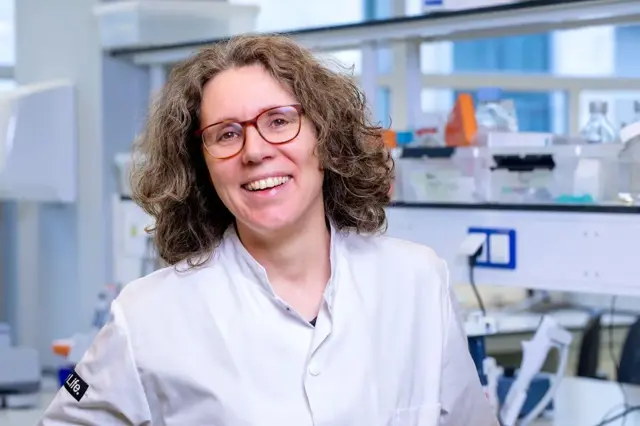

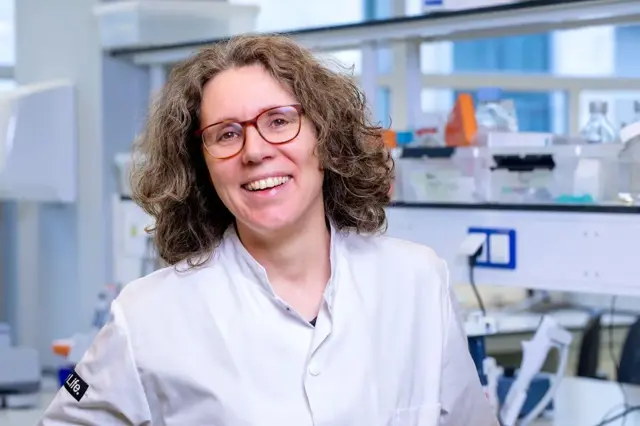

Making immunotherapy more effective with AI

Researchers at Sanquin have used an AI-based method to decode how immune cells regulate protein production. This breakthrough could strengthen immunotherapy and improve cancer treatments.


September 9

ERC Starting Grant for research on AI’s impact on labor markets and the welfare state

Political scientist Juliana Chueri (Vrije Universiteit Amsterdam) has received an ERC Starting Grant for her research into the political consequences of AI for labor markets and the welfare state.
