
4 December 2023

The battle over direction at OpenAI: 'Profit chosen over responsible rollout'

This week, OpenAI celebrated ChatGPT's first anniversary. But just last month, a major crisis seemed to put the company's future on the line. It exposed an internal struggle over direction that is far from settled.

Think of it as a battle between accelerating the development of artificial intelligence (AI) and slowing it down. "I think a lot of people believe that the faster the technology is developed, the sooner we solve some big problems," says Jelle Prins, co-founder of an AI startup. "You shouldn't want to slow that down too much."

Wim Nuijten, director of TU Eindhoven's AI Institute, is critical of what happened at OpenAI. "Profit-seeking seems to have won out over the responsible rollout of technology that can have a very big impact."

Smarter than humans

To understand what happened and why it matters, it helps first to understand OpenAI's ultimate goal. The company wants to move towards what is known as 'AGI' (artificial general intelligence): computers that are smarter than humans.

If that is ever achieved, and opinions differ considerably on whether it will be, it could change our entire society. The thinking is that it could bring humanity much good. But there are also concerns, the ultimate fear being that AI would then decide what is best for us and could, in theory, even choose to exterminate humanity.

That may fuel fears that OpenAI is in fact working on something that could pose an existential risk. "Is it going to destroy all of humanity? I'm not afraid of that," responds Antske Fokkens, professor of Language Technology at Vrije Universiteit Amsterdam. She is more concerned about dangers that already exist, such as the spread of misinformation.

OpenAI CEO Sam Altman, who was suddenly fired last month, believes the positive effects will outweigh the negative effects. But not everyone at OpenAI agrees.

The face of the "countermovement" is Ilya Sutskever, a leading AI researcher and one of the company's co-founders. An article in The Atlantic reveals that he became increasingly convinced of the power of the technology OpenAI is developing. But his conviction that the dangers are great grew along with it. He was also the one who fired Altman on behalf of the board, only to regret it later.

"A very important third direction is missing from this story," says Jelle Zuidema, associate professor of explainable AI at the University of Amsterdam. "Namely the group that doesn't want to talk about the existential danger, because they think that's exaggerated, but is definitely concerned about the effects AI is having now."

An 'AI breakthrough'

On top of that, there is plenty of speculation about whether OpenAI achieved a technological 'breakthrough' this year that could make its systems much more powerful. The speculation was prompted by reports from The Information and Reuters. At issue is a breakthrough referred to as "Q*" (pronounced Q-star). Among other things, it is said to be able to solve mathematical problems that other AI models find too difficult.

The supposed breakthrough reportedly left employees worried that safety safeguards were insufficient. Researchers reportedly warned the board about this in a letter. The reports suggest that those concerns contributed to Altman's dismissal, although technology newsletter Platformer reported that the board never received such a letter.

According to Nuijten of TU Eindhoven, it would be a breakthrough in how AI reasons, something researchers have worked on for decades, although he finds it hard to judge whether OpenAI has really got that far. "It would then, based on the knowledge and rules we have about logic and the physical world, be able to prove certain theories and make new inventions."

Zuidema also notes that such news stories about technological breakthroughs can serve another purpose: convincing the world that OpenAI is ahead of the competition.

New rules are a struggle too

All three experts agree that tech companies cannot be trusted to develop AI without oversight. Regulation is needed. Europe, for example, is working on it, although that is a struggle in itself.

MEP Kim van Sparrentak (GroenLinks) is taking part in the negotiations and says they will be tense. Germany, France and Italy do not want rules that are too strict, for fear of holding back European companies. Van Sparrentak notes that in the meantime, tech companies, including OpenAI, are lobbying heavily.

This article was published by NOS (in Dutch).


Similar news items

September 9

Multilingual organizations risk inconsistent AI responses

AI systems do not always give the same answers across languages. Research from CWI and partners shows that Dutch multinationals may unknowingly face risks, from HR to customer service and strategic decision-making.


September 9

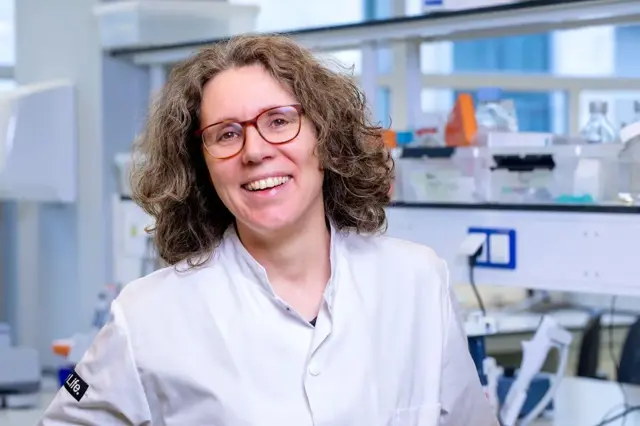

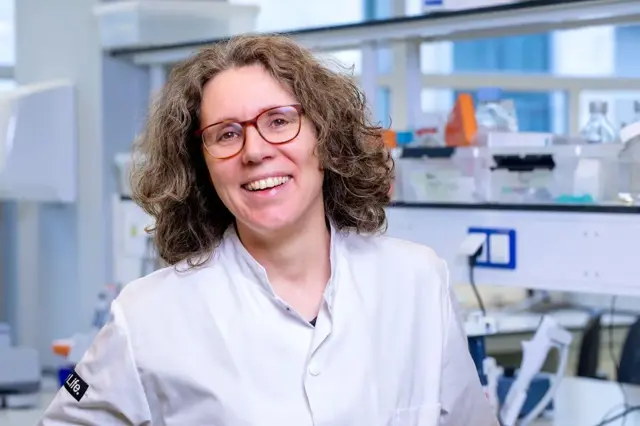

Making immunotherapy more effective with AI

Researchers at Sanquin have used an AI-based method to decode how immune cells regulate protein production. This breakthrough could strengthen immunotherapy and improve cancer treatments.


September 9

ERC Starting Grant for research on AI’s impact on labor markets and the welfare state

Political scientist Juliana Chueri (Vrije Universiteit Amsterdam) has received an ERC Starting Grant for her research into the political consequences of AI for labor markets and the welfare state.
